Whatispiping Team, in association with Everyeng, is offering an online, pre-recorded Comprehensive Piping Stress Analysis Certificate course for mechanical and piping engineers. In addition to the regular course content, participants get a dedicated 2-hour doubt-clearing (question-and-answer) session with the mentor.

Contents of the Online Piping Stress Analysis with Caesar II Course

The program will be delivered using Caesar II, one of the most widely used pipe stress analysis software packages. The full course is divided into four parts:

- Part A describes the basic requirements of pipe stress analysis and prepares participants to apply the software package.

- Part B describes the basic static analysis methods that every pipe stress engineer must know.

- Part C introduces the dynamic analysis modules available in Caesar II.

- Part D covers other relevant topics that will help a basic pipe stress engineer become an advanced user. Additional modules will be added to this part as they become available.

In its present form, the course covers roughly the following topics:

Part A: Basics of Pipe Stress Analysis

- What is Pipe Stress Analysis?

- Stress Critical Line List Preparation with Practical Case Study

- Inputs Required for Pipe Stress Analysis

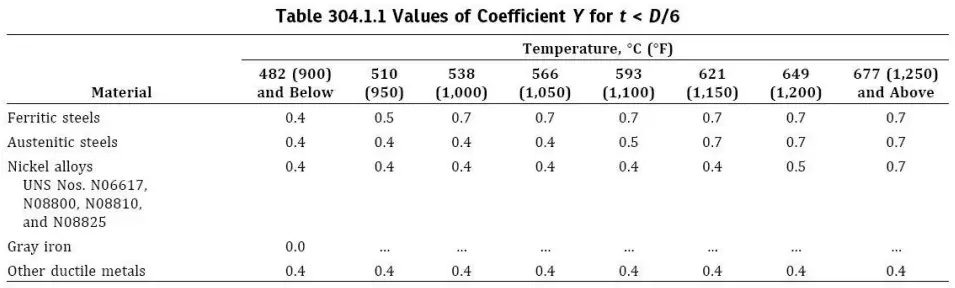

- Basics of ASME B31.3 for a Piping Stress Engineer

- ASME B31.3 Scope and Exclusions

- Why Stress Is Generated in a Piping System

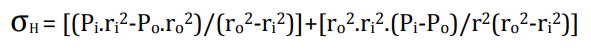

- Types of Pipe Stresses

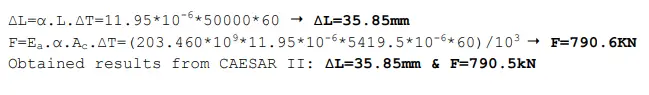

- Pipe Thickness Calculation

- Reinforcement Requirements

- ASME B31.3 Code Equations and Allowable Stresses

- Introduction to Pipe Supports

- Role of Pipe Supports in Piping Design

- Types of Pipe Supports

- List of Pipe Supports

- Pipe Support Span

- How to Support a Pipe?

- Pipe Support Optimization Rules

- Pipe Support Standard

- Support Engineering Considerations

- What is a Piping Isometric?

- What is an Expansion Loop?

- Bonus Lecture: Introduction to Pipe Stress

- Bonus Lecture: Pressure Stresses in Piping

Part B: Static Analysis in Caesar II

- Introduction to Caesar II

- Getting Started in Caesar II

- Stress Analysis of Pump Piping System

- Creating Load Cases

- Wind and Seismic Analysis

- Generating Stress Analysis Reports

- Editing Stress Analysis Model

- Spring Hanger Selection and Design in Caesar II

  - Introduction

  - Types of Spring Hangers

  - Components of a Spring Hanger

  - Selection of Variable and Constant Spring Hangers

  - Case Study of Spring Hanger Design and Selection

  - Salient Points

- Flange Leakage Analysis in Caesar II

  - Introduction

  - Types of Flange Leakage Analysis and Background Theory

  - Case Study: Pressure Equivalent Analysis

  - Case Study: NC Method

  - Case Study: ASME Sec VIII Method

- Stress Analysis of PSV Piping System

  - Introduction

  - PSV Reaction Force Calculation

  - Applying the PSV Reaction Force

  - Practical Case Study

  - Some Best Practices

- Heat Exchanger Pipe Stress Analysis

  - Introduction

  - Creating the Temperature Profile

  - Modeling the Heat Exchanger

  - Nozzle Load Qualification

  - Practical Case Study

  - Methodology for Shell-and-Tube Inlet Nozzle Stress Analysis

- Vertical Tower Piping Stress Analysis

  - Introduction

  - Creating the Temperature Profile

  - Equipment Modeling

  - Modeling Cleat Supports

  - Skirt Temperature Calculation

  - Nozzle Load Qualification

  - Practical Example

- Storage Tank Piping Stress Analysis

  - Introduction

  - Why Storage Tank Piping Is Critical

  - Tank Settlement

  - Tank Bulging

  - Practical Example of Tank Piping Stress Analysis

  - Nozzle Loading

- Pump Piping Stress Analysis

  - API 610 Pump Nozzle Evaluation Using Caesar II

Part C: Dynamic Analysis in Caesar II

- Introduction to Dynamic Analysis in Caesar II

- Types of Dynamic Analysis

- Static vs Dynamic Analysis

- Dynamic Modal Analysis

- Equivalent Static Slug Flow Analysis

- Dynamic Response Spectrum Analysis

Part D: Miscellaneous Topics

- WRC 297/537 Calculation

  - What are WRC 537 and WRC 297?

  - Inputs for WRC Calculation

  - WRC Calculation with Practical Example

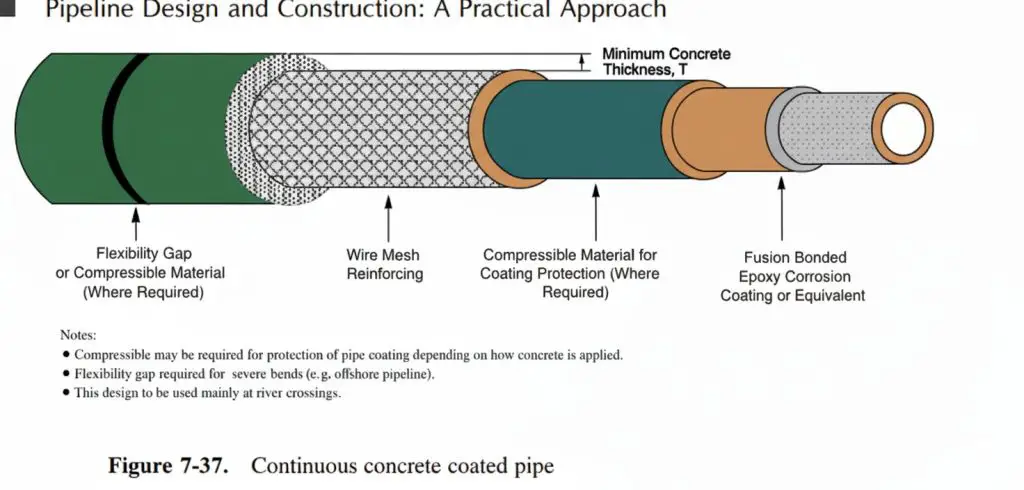

- Underground Pipe Stress Analysis

- Jacketed Piping Stress Analysis

- Creating Unit and Configuration Files in Caesar II

- ASME B31J: Improved Method for Calculating Stress Intensification (i) and Flexibility (k) Factors in Caesar II

- Discussion of Selected Questions and Answers

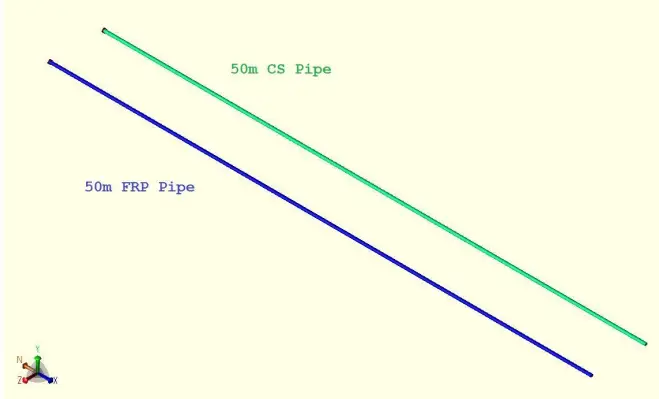

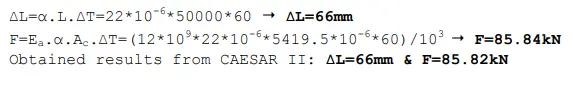

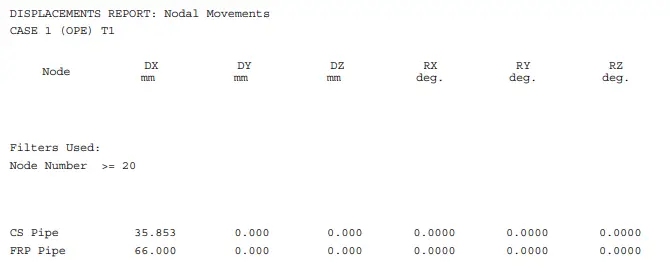

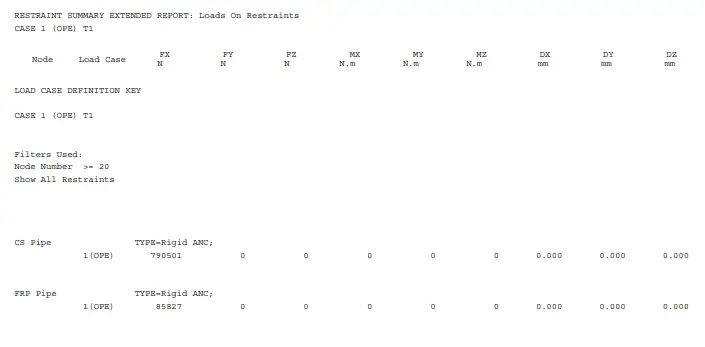

- GRE/FRP Pipe Stress Analysis

  - GRE Pipe Stress Analysis Using Caesar II

  - GRE Stress Analysis: Basics

  - FRP Pipe Stress Analysis Case Study

  - GRE Flange Leakage Analysis

  - Understanding the Meaning of the Stress Envelope

- Reviewing a Piping Stress Analysis

  - Introduction

  - What to Review

  - Review Steps

  - Case Study of Reviewing a Pipe Stress Analysis Report

  - Review Best Practices

- FIV Study

  - Flow-Induced Vibration: Introduction

  - What is Flow-Induced Vibration (FIV)?

  - Flow-Induced Vibration Analysis

  - Corrective and Mitigation Options

- AIV Study

  - Introduction

  - What is Acoustic-Induced Vibration (AIV)?

  - Acoustic-Induced Vibration Analysis

  - Corrective and Mitigation Options

How to Enroll for this Course

To join this course, click here and then click Buy Now. You will be asked to create and complete your profile and then make the payment. As soon as the payment is complete, you will get full access to the course. If you face any difficulty, contact the Everyeng team using the Contact Us button on their website.